Biography

Zhang Qi is a first-year PhD student in the computer vision group at the University of Amsterdam (UvA). His research focuses on optimizing the tracking module for monocular Gaussian SLAM, under the joint supervision of Dr. Martin R. Oswald, Dr. Sezer Karaoglu, and Prof. Theo Gevers. He also occasionally works on real-world robotics projects with Prof. Arnoud.

During his master’s studies at the University of Glasgow, he worked on optimizing monocular vSLAM in dynamic environments for his final-year project under the supervision of Prof. Paul Siebert. He also collaborated with Dr. Zhihao Lin and Dr. Zhen Tian, under the supervision of Dr. Jianglin Lan, on the Camera-Assisted Algorithm for Autonomous Exploration Aiding the Visually Impaired in dynamic environments. Outside his research, he is an outstanding beatbox performer and an amateur long-distance runner (10 km in 40 min).

I am looking for more collaborations (vision-based navigation, joint position estimation in dynamic environments, etc.). Please contact me directly.

Reviewer for IROS 2024, Measurement

- Visual SLAM

- Indoor Localization

- Multi-Object Tracking

MSc in Data Science, 2022

University of Glasgow

Skills

Accomplishments

Projects

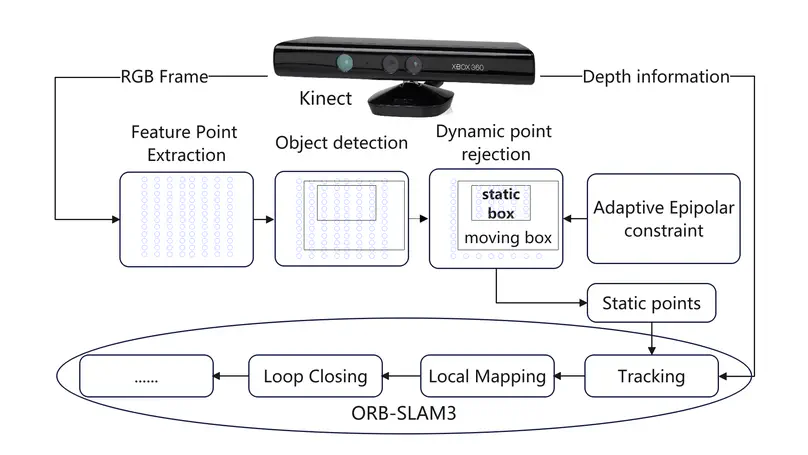

• Method overview:

The semantic module combines only ORB-SLAM3 and YOLOX-s.

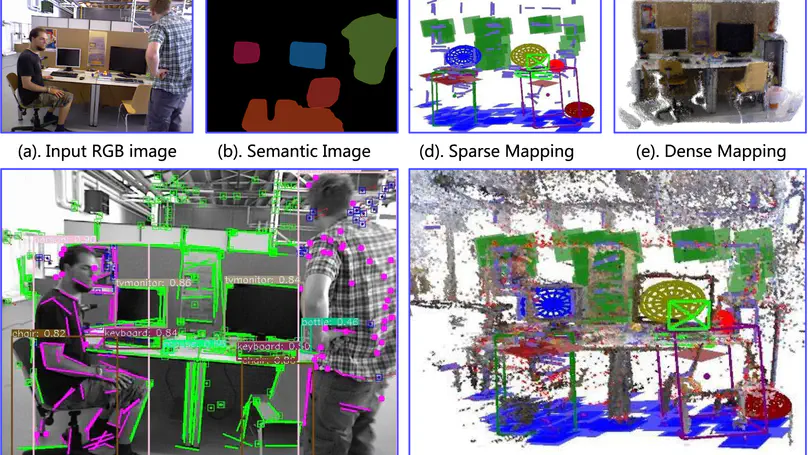

The mapping module is based on a system for semantic labelling of 3D point clouds in vSLAM:

- X. Qi, S. Yang, and Y. Yan, "Deep Learning Based Semantic Labelling of 3D Point Cloud in Visual SLAM," vol. 428, p. 012023, Oct. 2018.

• Open source work: The work is open source on GitHub and presented on Bilibili (8,000+ views).

Featured Publications

Traditional visual Simultaneous Localization and Mapping (SLAM) methods based on point features are often limited by strong static assumptions and a reliance on texture information, resulting in inaccurate camera pose estimation and object localization. To address these challenges, we present SLAM, a novel semantic RGB-D SLAM system that accurately estimates both the camera pose and the 6-DoF pose of other objects, producing complete and clean static 3D maps in dynamic environments. Our system makes full use of the point, line, and plane features in space to improve the accuracy of camera pose estimation. It combines a traditional geometric method with a deep learning method to detect both known and unknown dynamic objects in the scene. Moreover, our system provides three mapping modes (dense, semi-dense, and sparse), which can be selected according to the needs of the task. This makes our visual SLAM system applicable to diverse application areas. Evaluation on the TUM RGB-D and Bonn RGB-D datasets demonstrates that our SLAM system achieves the highest localization accuracy and the cleanest static 3D maps in dynamic environments among the compared state-of-the-art methods. Specifically, our system achieves a root mean square error (RMSE) of 0.018 m on the highly dynamic TUM w/half sequence, outperforming ORB-SLAM3 (0.231 m) and DRG-SLAM (0.025 m). On the Bonn dataset, our system performs best in 14 out of 18 sequences, with an average RMSE reduction of 27.3% compared to the next best method.
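
To make the reported metric concrete, the following is a minimal sketch of how a translational RMSE and a relative reduction could be computed. It assumes the estimated and ground-truth trajectories are already time-associated and aligned, which real benchmark tools handle separately. The function names `ate_rmse` and `relative_reduction` are hypothetical and are not taken from the paper's code; only the three RMSE values come from the abstract above.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Translational RMSE between two trajectories.

    Assumption: both inputs are sequences of (x, y, z) positions that are
    already time-associated and expressed in the same reference frame.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean position error for each pose pair.
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def relative_reduction(baseline_rmse, our_rmse):
    """Fraction by which our_rmse is lower than baseline_rmse."""
    return (baseline_rmse - our_rmse) / baseline_rmse


# RMSE values quoted in the abstract for the TUM w/half sequence (metres).
vs_orb_slam3 = relative_reduction(0.231, 0.018)
vs_drg_slam = relative_reduction(0.025, 0.018)
print(f"reduction vs ORB-SLAM3: {vs_orb_slam3:.1%}")
print(f"reduction vs DRG-SLAM:  {vs_drg_slam:.1%}")
```

With the quoted values, the reduction is roughly 92% relative to ORB-SLAM3 and 28% relative to DRG-SLAM on that sequence.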

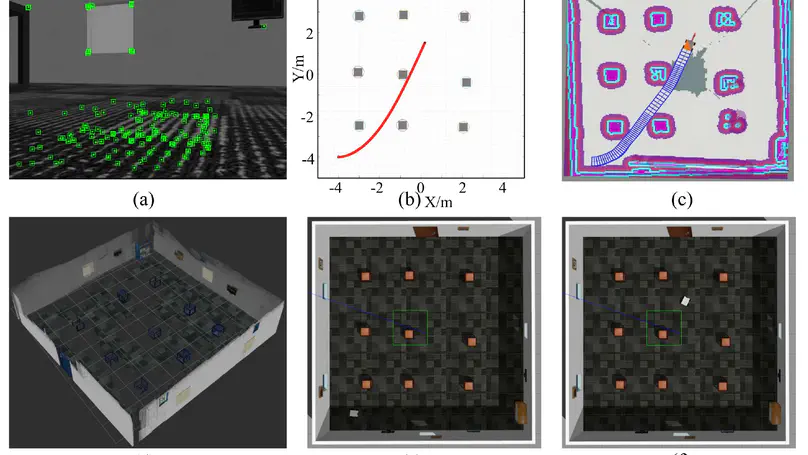

The paper presents a vision-based obstacle avoidance strategy for lightweight self-driving cars that runs on a CPU-only device using a single RGB-D camera. The method consists of two steps: visual perception and path planning. The visual perception step uses ORB-SLAM3 enhanced with optical flow to estimate the car’s poses and extract rich texture information from the scene. The path planning step combines a control Lyapunov function and a control barrier function in the form of a quadratic program (CLF-CBF-QP) with an obstacle shape reconstruction process (SRP) to plan safe and stable trajectories. To validate the performance and robustness of the proposed method, simulation experiments were conducted with a car in various complex indoor environments using the Gazebo simulation environment. The proposed method effectively avoids obstacles in these scenes and outperforms benchmark algorithms, producing more stable and shorter trajectories across multiple simulated scenes.
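
For readers unfamiliar with the CLF-CBF-QP mentioned above, a generic textbook form is sketched below. It is not necessarily the exact formulation used in the paper. It assumes control-affine dynamics $\dot{x} = f(x) + g(x)u$, a control Lyapunov function $V$, a control barrier function $h$ whose non-negative values mark the safe set, a positive-definite weight $H$, a slack penalty $p > 0$, and rates $\gamma, \alpha > 0$. All of these symbols are illustrative assumptions.

```latex
\begin{aligned}
\min_{u,\ \delta}\quad & \tfrac{1}{2}\, u^{\top} H u + p\, \delta^{2} \\
\text{s.t.}\quad & L_f V(x) + L_g V(x)\, u + \gamma\, V(x) \le \delta, \\
& L_f h(x) + L_g h(x)\, u + \alpha\, h(x) \ge 0 .
\end{aligned}
```

The slack variable $\delta$ relaxes only the stability (CLF) constraint, so the safety (CBF) constraint remains hard when the two conflict.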

Recent Publications

Contact

I welcome exchanges and collaborations with fellow scholars. Undergraduate students are also welcome to work together on projects. Please feel free to contact me.

- zhangqi_research@163.com

- 8:00 to 18:00