Semantic SLAM for mobile robots in dynamic environments based on visual camera sensors

Abstract

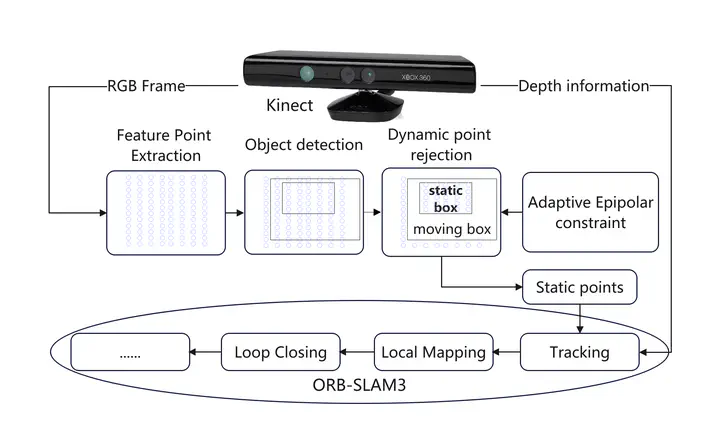

Visual simultaneous localization and mapping (vSLAM) is inherently constrained by the static-world assumption, which makes reliable operation in the presence of dynamic objects challenging. In this paper, we propose a real-time semantic vSLAM system designed for both indoor and outdoor dynamic environments. Using object detection, we identify objects from 80 categories and apply motion consistency checks to find outliers in each image. We present distinct methods for examining the motion states of humans and of other objects. For detected humans, we introduce an algorithm that assesses whether an individual is seated and then divides the bounding box of each seated individual into two parts based on human body proportions. We then apply the same threshold values used for standing individuals to determine the motion state of each of the two boxes. For non-human objects, we propose an algorithm that automatically adjusts the threshold values for different bounding boxes, ensuring consistent detection performance across objects. Finally, we retain points within static boxes that are contained in dynamic boxes and eliminate all other points in dynamic boxes, which lets the system benefit from the larger number of detected categories. Our system is evaluated on the indoor TUM and Bonn RGB-D datasets, with further testing on the outdoor stereo KITTI dataset. The results show that it outperforms most SLAM systems in dynamic environments. Moreover, we test our system in real-world environments with a monocular camera, demonstrating its robustness and generality across diverse settings.
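
The final point-filtering rule can be illustrated with a minimal sketch. It is not the system's actual implementation: the function names, the `(x_min, y_min, x_max, y_max)` box format, and the assumption that "contained" means full geometric containment of one axis-aligned box in another are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)
Point = Tuple[float, float]              # assumed format: (x, y) in pixels


def contains_point(box: Box, p: Point) -> bool:
    """True if point p lies inside box (borders included)."""
    x0, y0, x1, y1 = box
    return x0 <= p[0] <= x1 and y0 <= p[1] <= y1


def contains_box(outer: Box, inner: Box) -> bool:
    """True if inner lies fully inside outer (assumed meaning of 'contained')."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def filter_keypoints(points: List[Point],
                     dynamic_boxes: List[Box],
                     static_boxes: List[Box]) -> List[Point]:
    """Keep points outside all dynamic boxes, plus points that fall in a
    static box nested inside a dynamic box that covers the point."""
    kept = []
    for p in points:
        hit = [d for d in dynamic_boxes if contains_point(d, p)]
        if not hit:
            kept.append(p)  # not covered by any dynamic box
            continue
        rescued = any(
            contains_point(s, p) and any(contains_box(d, s) for d in hit)
            for s in static_boxes
        )
        if rescued:
            kept.append(p)  # inside a static box nested in a dynamic box
    return kept


points = [(5, 5), (50, 50), (62, 62), (200, 200)]
dynamic_boxes = [(40, 40, 100, 100)]   # e.g. a moving person
static_boxes = [(60, 60, 70, 70)]      # e.g. a static object inside that box
result = filter_keypoints(points, dynamic_boxes, static_boxes)
```

In this toy input, the point at `(50, 50)` lies only in the dynamic box and is discarded, while `(62, 62)` is retained because it also lies in the nested static box.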